Data Lake vs. Data Warehouse: 2025 Guide for US Businesses

For US businesses in 2025, choosing between a data lake and a data warehouse requires understanding the distinct role each plays in data management, because that choice shapes analytical capabilities and strategic decision-making.

In 2025, US businesses face a pivotal decision about their data infrastructure. The choice between a data lake and a data warehouse is no longer just a technical consideration; it is a strategic one that affects a company’s ability to derive insights, innovate, and stay competitive. Understanding the fundamental differences, strengths, and ideal use cases of each is critical for sound data management and sustained growth.

Understanding the Core Concepts: Data Lake and Data Warehouse

At their essence, both data lakes and data warehouses serve as repositories for an organization’s data. However, their design philosophies, data structures, and primary objectives diverge significantly, leading to distinct applications in the modern business environment. A clear understanding of these foundational differences is the first step toward making an informed decision.

The Data Warehouse: Structured and Purpose-Built

A data warehouse is a centralized repository of integrated data from one or more disparate sources. It stores current and historical data in a single place and serves as the basis for analytical reports used across the enterprise. Its data is highly structured and organized, optimized for fast querying and reporting.

- Structured Data: Primarily handles relational data from operational systems.

- Pre-defined Schema: Data is transformed and cleaned before storage, conforming to a strict schema.

- Business Intelligence: Ideal for traditional BI, executive dashboards, and regulatory reporting.

- Optimized for Queries: Designed for efficient SQL queries and rapid data retrieval.

Its strength lies in providing a single source of truth for critical business metrics, ensuring consistency and reliability across reports. This makes it invaluable for historical analysis and understanding past performance.

The Data Lake: Raw, Flexible, and Scalable

A data lake, conversely, is a vast, centralized repository that holds a massive amount of raw data in its native format until it’s needed. This includes structured, semi-structured, and unstructured data. Data lakes prioritize flexibility and scalability, allowing organizations to store virtually any type of data for future analytical needs.

- Raw Data: Stores data in its original format, without prior transformation.

- Schema-on-Read: Schema is applied when data is read, not when it’s written.

- Advanced Analytics: Supports machine learning, predictive analytics, and real-time processing.

- Cost-Effective Storage: Often leverages commodity hardware and cloud storage, making it economical for large volumes.

The flexibility of a data lake makes it a powerful tool for exploratory analytics, data science initiatives, and discovering new patterns that might not be evident with structured data alone. It’s built for the unknown, for future questions that haven’t even been formulated yet.

In summary, while both store data, the data warehouse offers a refined, structured view for established business questions, whereas the data lake provides a raw, expansive pool for emergent analytical challenges and large-scale data exploration.

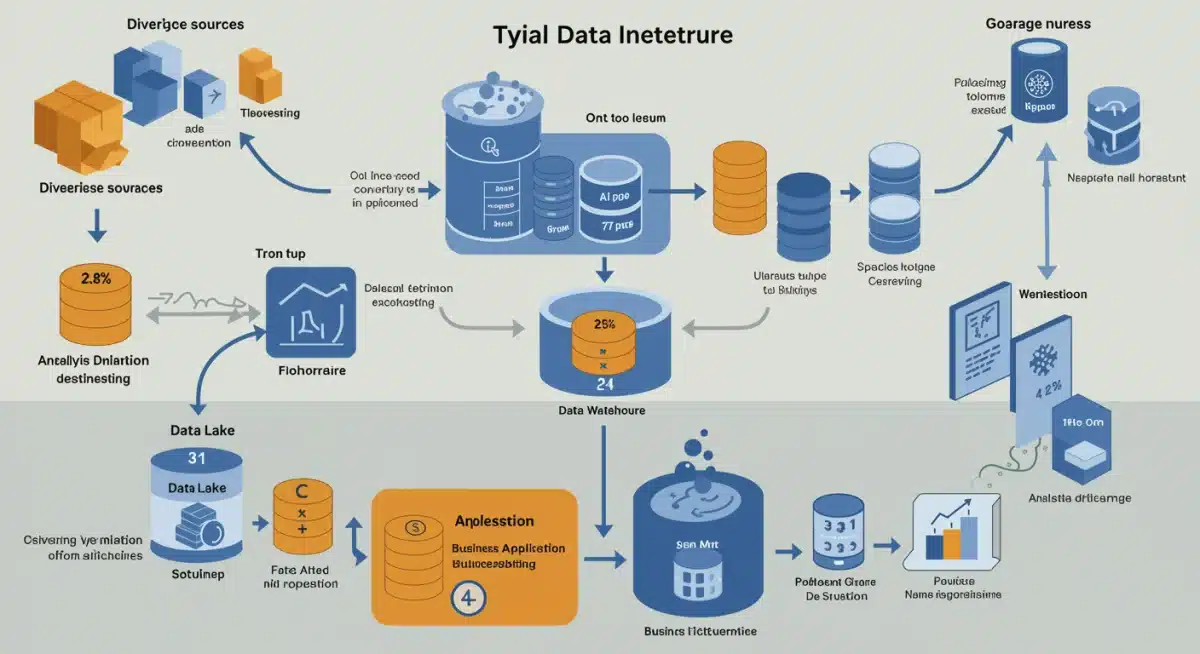

Key Differences in Data Management and Architecture for 2025

As US businesses navigate the complexities of data in 2025, understanding the architectural and management distinctions between data lakes and data warehouses becomes paramount. These differences directly impact how data is collected, stored, processed, and ultimately leveraged for business insights. The choice often reflects an organization’s strategic analytical goals and its appetite for data flexibility versus rigid structure.

Schema and Data Preparation

The approach to schema and data preparation is perhaps the most fundamental differentiator. A data warehouse adheres to a ‘schema-on-write’ principle. Data is cleaned, transformed, and structured according to a predefined schema *before* it is loaded into the warehouse. This enforces data quality and consistency, making the data reliable for critical business reporting. The process can be time-consuming, but it gives downstream consumers a well-defined, consistent structure to work with.

In contrast, a data lake employs a ‘schema-on-read’ approach. Data is ingested in its raw format, without prior transformation or adherence to a fixed schema. The schema is defined and applied only when the data is queried or analyzed. This flexibility allows for rapid data ingestion and accommodates diverse data types, but it places a greater burden on data consumers to understand and prepare the data for their specific use cases.
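The contrast between the two principles can be sketched in a few lines of Python. This is a minimal illustration, not a production pattern: SQLite stands in for a warehouse, a list of JSON strings stands in for files in a lake, and the `orders` table, its columns, and the sample events are all hypothetical.

```python
import json
import sqlite3

# Hypothetical raw events, as they might arrive from different source systems.
RAW_EVENTS = [
    '{"order_id": 1, "amount": "19.99", "region": "US-East"}',
    '{"order_id": 2, "amount": "5.00"}',
    '{"order_id": "3", "amount": "n/a", "region": "US-West"}',
]


def load_schema_on_write(conn, raw_lines):
    """Warehouse style: validate and convert each record BEFORE storing it."""
    conn.execute(
        "CREATE TABLE orders ("
        "order_id INTEGER PRIMARY KEY, amount REAL NOT NULL, region TEXT NOT NULL)"
    )
    rejected = []
    for line in raw_lines:
        record = json.loads(line)
        try:
            row = (int(record["order_id"]), float(record["amount"]), record["region"])
        except (KeyError, ValueError):
            rejected.append(line)  # does not fit the schema, so it is never loaded
            continue
        conn.execute("INSERT INTO orders VALUES (?, ?, ?)", row)
    return rejected


def read_schema_on_read(raw_lines):
    """Lake style: everything was stored as-is; the reader decides how to interpret it."""
    total = 0.0
    for line in raw_lines:
        record = json.loads(line)
        try:
            total += float(record.get("amount", 0))
        except ValueError:
            pass  # this particular reader chooses to skip unparseable amounts
    return total
```

With schema-on-write, the two malformed records are rejected at load time and every later query sees only clean rows. With schema-on-read, all three records are kept, and each consumer has to decide what to do with a missing `region` or an `"n/a"` amount.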

Data Types and Sources

Data warehouses traditionally excel at handling structured, relational data from operational databases, CRM systems, and ERP platforms. They are optimized for quantitative analysis and reporting on well-defined business metrics. Their strength lies in integrating disparate structured sources into a cohesive view.

Data lakes are designed to ingest and store all forms of data: structured, semi-structured (like JSON, XML, logs), and unstructured (like text documents, images, video, audio, IoT sensor data). This broad capability makes them ideal for organizations dealing with a high volume and variety of data sources, especially those looking to leverage emerging data types for advanced analytics and artificial intelligence applications.
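To make "store it in its native format" concrete, here is a small sketch of landing a file in a lake's raw zone without touching its contents. It uses a local directory in place of cloud object storage, and the `raw/<source>/<yyyy>/<mm>/<dd>/` layout and the `land_raw_file` helper are assumptions chosen for illustration, not a standard.

```python
from datetime import date
from pathlib import Path


def land_raw_file(lake_root: Path, source: str, filename: str,
                  payload: bytes, day: date) -> Path:
    """Store a payload byte-for-byte under <root>/raw/<source>/<yyyy>/<mm>/<dd>/."""
    target_dir = lake_root / "raw" / source / f"{day:%Y}" / f"{day:%m}" / f"{day:%d}"
    target_dir.mkdir(parents=True, exist_ok=True)
    target = target_dir / filename
    target.write_bytes(payload)  # no parsing, no transformation
    return target
```

Because the function only deals in bytes, the same call works for a JSON export, a log file, or an image. Partitioning the path by source and date is one common way to keep raw data findable later.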

Processing and Performance

Data warehouses are typically optimized for Online Analytical Processing (OLAP) queries, which involve complex aggregations and joins across large datasets. They are designed for fast query performance for predefined reports and dashboards, often using highly optimized indexing and columnar storage. Performance is consistent and predictable for established analytical patterns.
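As a rough illustration of the kind of OLAP query described here, the sketch below joins a fact table to a dimension table and aggregates revenue. SQLite is used only because it ships with Python; it is a row store, not a columnar warehouse engine, and the `fact_sales` and `dim_region` tables and their figures are invented for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE dim_region (region_id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE fact_sales (region_id INTEGER, quarter TEXT, revenue REAL);
INSERT INTO dim_region VALUES (1, 'Northeast'), (2, 'West');
INSERT INTO fact_sales VALUES
    (1, '2025-Q1', 1200.0), (1, '2025-Q1', 800.0),
    (2, '2025-Q1', 500.0),  (2, '2025-Q2', 700.0);
""")


def revenue_by_region_and_quarter(conn):
    """Join facts to their dimension and aggregate: a typical reporting query."""
    return conn.execute("""
        SELECT r.name, s.quarter, SUM(s.revenue) AS total
        FROM fact_sales AS s
        JOIN dim_region AS r USING (region_id)
        GROUP BY r.name, s.quarter
        ORDER BY r.name, s.quarter
    """).fetchall()
```

The query shape (join, group, aggregate) is what warehouse engines are tuned for; the same SQL would typically run largely unchanged on a managed cloud warehouse, with the engine handling storage layout and parallelism.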

Data lakes, because they store raw data, often rely on distributed processing frameworks like Apache Spark or Hadoop for analysis. While they can handle immense volumes of data and complex computations, query performance varies with the data format, the complexity of the query, and the processing engine used. They are better suited to exploratory data science, machine learning model training, and batch processing than to low-latency, ad hoc reporting on structured data.

These architectural choices directly influence the operational efficiency and analytical capabilities of a business. A data warehouse provides stability and clarity for known business questions, while a data lake offers unparalleled agility and breadth for exploring new data frontiers.

Use Cases and Business Insights: When to Choose Which

The decision between implementing a data lake or a data warehouse, or even a hybrid approach, largely hinges on the specific business insights an organization aims to achieve. Each solution caters to different types of analytical needs and operational requirements. Understanding these distinct use cases is crucial for US businesses to optimize their data strategy in 2025.

Ideal Scenarios for a Data Warehouse

Data warehouses are the backbone for traditional business intelligence (BI) and reporting. They are best suited for situations where data consistency, accuracy, and historical analysis of structured data are paramount. Consider a data warehouse when your business needs:

- Standardized Reporting: Generating regular reports on sales performance, financial results, or operational efficiency.

- Historical Analysis: Understanding trends over time, such as year-over-year growth or customer behavior patterns.

- Regulatory Compliance: Ensuring data integrity and auditability for compliance with industry regulations.

- Pre-defined Metrics: When key performance indicators (KPIs) are well-established and require consistent measurement.

For example, a retail company might use a data warehouse to analyze quarterly sales figures across different product lines and regions, providing a clear, consistent view of business health to executives. The structured nature of the data warehouse ensures that all reports draw from the same, validated source.

Ideal Scenarios for a Data Lake

Data lakes shine in environments where flexibility, scalability, and the ability to process diverse, raw data are critical. They are the preferred choice for advanced analytics, machine learning, and exploratory data science initiatives where the data’s ultimate value might not yet be fully understood. Opt for a data lake when your business aims for:

- Advanced Analytics & Machine Learning: Building predictive models, recommendation engines, or fraud detection systems using various data types.

- IoT Data Processing: Ingesting and analyzing real-time sensor data from connected devices for operational insights or predictive maintenance.

- Exploratory Data Science: Allowing data scientists to experiment with raw data to uncover new patterns and opportunities.

- Unstructured Data Analysis: Gaining insights from customer reviews, social media feeds, call center recordings, or video content.

A healthcare provider, for instance, might leverage a data lake to combine patient records, medical images, genomic data, and wearable device data to develop personalized treatment plans or identify disease outbreaks earlier. The data lake’s ability to store and process this diverse, high-volume data is indispensable for such complex analyses.

The Hybrid Approach: Data Lakehouse

Increasingly, organizations are adopting a hybrid architecture, often referred to as a ‘data lakehouse.’ This model attempts to combine the best features of both: the flexibility and scalability of a data lake with the data management and ACID (Atomicity, Consistency, Isolation, Durability) transactions of a data warehouse. This approach offers a compelling solution for businesses that need both robust BI reporting and advanced, flexible analytics on diverse data.

The strategic choice depends on current analytical maturity, future data ambitions, and the specific business problems you are trying to solve. For many US businesses in 2025, a multi-faceted approach leveraging the strengths of each system will likely yield the most comprehensive and impactful insights.

Implementation Considerations for US Businesses in 2025

Implementing a data lake or data warehouse, or a combination thereof, involves more than just selecting technology; it requires careful planning around infrastructure, data governance, and organizational capabilities. US businesses in 2025 must consider several critical factors to ensure a successful deployment that aligns with their strategic objectives.

Infrastructure and Cloud Adoption

Most new data lake and data warehouse implementations in 2025 are likely to be cloud-native. Cloud platforms like AWS, Azure, and Google Cloud offer scalable infrastructure that can adjust dynamically to data volume and processing demands. For data warehouses, offerings like Amazon Redshift, Azure Synapse Analytics, and Google BigQuery provide managed services that simplify deployment and maintenance. For data lakes, cloud object storage services (Amazon S3, Azure Data Lake Storage, Google Cloud Storage) combined with serverless computing and distributed processing engines are common.

Choosing the right cloud provider and services depends on existing infrastructure, vendor lock-in concerns, and specific performance requirements. It’s essential to evaluate pricing models, integration capabilities, and the ecosystem of tools offered by each provider.

Data Governance and Security

Data governance is critical for both data lakes and data warehouses, but the approach differs. In a data warehouse, governance is often baked into the schema and ETL processes, ensuring data quality and access controls from the outset. For data lakes, governance can be more challenging due to the raw, diverse nature of the data. Implementing robust data cataloging, metadata management, and access control policies (e.g., role-based access, encryption) is vital to prevent ‘data swamps’ (lakes whose contents are undocumented and effectively unusable) and to ensure compliance with regulations like CCPA and HIPAA.
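A data catalog with role-based access can be pictured as a registry that refuses undocumented datasets and answers "who may read this?". The sketch below is a toy in-memory version; `CatalogEntry`, `DataCatalog`, and the role names are hypothetical and do not correspond to any particular catalog product.

```python
from dataclasses import dataclass, field


@dataclass
class CatalogEntry:
    path: str                      # where the dataset lives in the lake
    owner: str                     # accountable team or person
    description: str               # what the data means
    contains_pii: bool = False     # flag for privacy-sensitive data
    allowed_roles: set = field(default_factory=set)


class DataCatalog:
    def __init__(self):
        self._entries = {}

    def register(self, name, entry):
        # Refusing undocumented datasets is one simple guard against a data swamp.
        if not entry.owner or not entry.description:
            raise ValueError(f"{name}: owner and description are required")
        self._entries[name] = entry

    def can_read(self, name, role):
        """Role-based access check; unknown datasets are denied by default."""
        entry = self._entries.get(name)
        return entry is not None and role in entry.allowed_roles

    def pii_datasets(self):
        """List datasets flagged as containing personal data, e.g. for an audit."""
        return sorted(n for n, e in self._entries.items() if e.contains_pii)
```

In practice these checks are usually enforced by the platform (cloud IAM policies and a managed catalog service) rather than in application code, but the underlying model is the same: metadata first, then access decisions based on it.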

Security measures, including encryption at rest and in transit, network isolation, and identity and access management (IAM), must be rigorously applied to protect sensitive business and customer data, regardless of the chosen architecture.

Organizational Skills and Culture

The success of a data initiative is heavily dependent on the skills within an organization. Data warehouses typically require strong ETL developers, SQL experts, and BI analysts. Data lakes, on the other hand, demand data engineers, data scientists, and machine learning engineers proficient in programming languages like Python or R, and distributed computing frameworks.

Fostering a data-driven culture that encourages experimentation, collaboration between data teams and business stakeholders, and continuous learning is paramount. Investing in training and potentially hiring new talent with relevant skill sets will be a key differentiator for businesses looking to maximize their data investments.

These considerations are not merely technical hurdles but strategic investments that shape a company’s ability to harness the full potential of its data assets in a competitive 2025 market.

The Evolving Landscape: Data Lakehouse and Real-time Analytics

The data landscape is not static; it’s continuously evolving, driven by demands for faster insights, greater flexibility, and the ability to handle increasingly complex data types. In 2025, two significant trends are reshaping the way US businesses approach data management: the rise of the data lakehouse architecture and the increasing importance of real-time analytics. These developments often blur the traditional lines between data lakes and data warehouses, offering new paradigms for optimal data utilization.

The Emergence of the Data Lakehouse

As mentioned earlier, the data lakehouse represents a convergence, aiming to deliver the best of both worlds. It builds upon the open, flexible, and scalable storage of a data lake, typically using formats like Parquet or ORC on cloud object storage, and adds data warehousing capabilities like ACID transactions, schema enforcement, and robust metadata management. This allows organizations to perform both traditional BI and advanced analytics on a single platform, reducing data duplication and simplifying data pipelines.

- Unified Platform: Consolidates data for diverse workloads, from BI to AI.

- ACID Transactions: Ensures data reliability and consistency, crucial for data integrity.

- Schema Enforcement: Provides governance and quality without sacrificing raw data access.

- Cost Efficiency: Reduces infrastructure complexity and data movement costs.

For US businesses, the data lakehouse offers a compelling solution to overcome the limitations of strictly separated data architectures, providing a more agile and comprehensive approach to data management. It allows data teams to work with raw, diverse data while still providing business users with reliable, structured data for reporting.
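The two warehouse-style guarantees named above, schema enforcement and atomic commits, can be illustrated with a toy table built on plain files. This is a deliberately simplified sketch: it handles a single writer, uses JSON instead of Parquet, and the `ToyTable` class and its commit-log file are invented for illustration rather than taken from any real lakehouse format.

```python
import json
import os
import tempfile
from pathlib import Path

SCHEMA = {"order_id": int, "amount": float}  # hypothetical table schema


class ToyTable:
    """Data files plus a commit log; readers only see files listed in the log."""

    def __init__(self, root: Path):
        self.root = root
        self.log = root / "_commit_log.json"
        root.mkdir(parents=True, exist_ok=True)
        if not self.log.exists():
            self.log.write_text("[]")

    def append(self, rows):
        # Schema enforcement: reject the whole batch if any row does not conform.
        for row in rows:
            if set(row) != set(SCHEMA) or any(
                not isinstance(row[col], typ) for col, typ in SCHEMA.items()
            ):
                raise ValueError(f"row violates schema: {row!r}")
        committed = json.loads(self.log.read_text())
        data_file = f"part-{len(committed):05d}.json"
        (self.root / data_file).write_text(json.dumps(rows))
        # Atomic commit: write the new log to a temp file, then swap it in.
        fd, tmp = tempfile.mkstemp(dir=self.root)
        with os.fdopen(fd, "w") as handle:
            json.dump(committed + [data_file], handle)
        os.replace(tmp, self.log)

    def read(self):
        rows = []
        for data_file in json.loads(self.log.read_text()):
            rows.extend(json.loads((self.root / data_file).read_text()))
        return rows
```

A batch becomes visible only when the log is swapped, so a reader never sees a half-written append, and a batch that violates the schema never reaches storage. Real lakehouse table formats add much more, including concurrent-writer handling and table history, but they rest on the same idea of a transaction log over open data files.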

The Imperative for Real-time Analytics

In 2025, the ability to derive insights from data in near real-time is no longer a luxury but a competitive necessity. Businesses need to react instantly to market changes, customer behavior, and operational events. While traditional data warehouses are typically designed for batch processing, modern data architectures are increasingly incorporating real-time data ingestion and processing capabilities.

Data lakes, with their ability to ingest streaming data from various sources (e.g., IoT devices, clickstreams, social media feeds), are foundational to real-time analytics. When combined with streaming technologies such as Apache Kafka (event transport) and Apache Flink (stream processing), they enable businesses to perform:

- Real-time Fraud Detection: Identifying suspicious transactions as they occur.

- Personalized Customer Experiences: Delivering tailored recommendations or offers instantly.

- Operational Monitoring: Gaining immediate insights into system performance and potential issues.

- Dynamic Pricing: Adjusting prices based on real-time demand and competitor activity.

The integration of real-time capabilities into data lake and lakehouse architectures is empowering businesses to move from reactive analysis to proactive decision-making, driving immediate value and enhancing responsiveness in a fast-paced market.
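The core idea behind use cases like real-time fraud detection is evaluating each event as it arrives against a sliding time window, rather than waiting for a nightly batch. The sketch below shows that logic in plain Python over an in-memory sequence of events; in production the events would come from a streaming platform and the windowing would be done by a stream processor. The function name, the 60-second window, and the spending limit are illustrative assumptions, not recommended thresholds.

```python
from collections import defaultdict, deque


def flag_rapid_spend(events, window_seconds=60, limit=1000.0):
    """Yield (timestamp, card) whenever a card's spend inside the window exceeds the limit.

    `events` is an iterable of (timestamp_seconds, card_id, amount) in time order.
    """
    recent = defaultdict(deque)   # card_id -> deque of (timestamp, amount)
    totals = defaultdict(float)   # card_id -> running sum inside the window
    for ts, card, amount in events:
        window = recent[card]
        window.append((ts, amount))
        totals[card] += amount
        # Evict events that have fallen out of the window.
        while window and window[0][0] <= ts - window_seconds:
            _, old_amount = window.popleft()
            totals[card] -= old_amount
        if totals[card] > limit:
            yield ts, card
```

Because the function is a generator, it emits an alert the moment the triggering event is processed, which is the behavior that distinguishes streaming analysis from batch reporting.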

These evolving trends highlight a shift towards more integrated, flexible, and responsive data platforms. US businesses that embrace these advancements will be better positioned to extract maximum value from their data assets and stay ahead of the curve.

Cost-Benefit Analysis: ROI for Data Lakes and Data Warehouses

When evaluating data infrastructure options, US businesses must conduct a thorough cost-benefit analysis to understand the return on investment (ROI) for both data lakes and data warehouses. While both represent significant investments, their cost structures and the value they generate can differ substantially, impacting long-term financial health and strategic advantage in 2025.

Cost Factors for Data Warehouses

Data warehouses, especially traditional on-premise solutions, historically involved substantial upfront costs for hardware, software licenses, and specialized personnel. While cloud data warehouses mitigate some of these initial expenses, ongoing costs include:

- Licensing Fees: For proprietary data warehouse software.

- ETL Tooling: Investment in robust ETL (Extract, Transform, Load) tools for data preparation.

- Storage and Compute: Scalable cloud resources, often with tiered pricing.

- Specialized Personnel: Data architects, database administrators, and BI developers.

- Maintenance: Ongoing upkeep, patching, and optimization.

The benefits, however, are clear: high data quality, consistent reporting, and reliable insights for critical business decisions. This leads to improved operational efficiency, better strategic planning, and reduced risks associated with inaccurate data, all contributing to a strong ROI for established analytical needs.

Cost Factors for Data Lakes

Data lakes often have lower per-unit storage costs because they rely on commodity hardware or cloud object storage. However, their total cost of ownership (TCO) depends on much more than storage:

- Storage: Generally inexpensive for raw data storage.

- Processing: Costs associated with distributed computing frameworks (e.g., Spark clusters) which can scale up significantly.

- Data Ingestion: Tools and services for ingesting diverse data types.

- Data Governance: Investment in data cataloging, metadata management, and security, which can be complex.

- Skilled Workforce: Higher demand for data engineers and data scientists, who command premium salaries.

- Potential for ‘Data Swamps’: Without proper governance, a data lake can become a costly, unusable repository.

The ROI from a data lake often comes from unlocking new revenue streams through advanced analytics, predictive modeling, and AI applications, as well as gaining a deeper, more comprehensive understanding of customer behavior and market dynamics. These benefits, while potentially transformative, can be harder to quantify initially compared to the direct operational improvements from a data warehouse.

Hybrid Model (Data Lakehouse) Cost-Benefit

A data lakehouse aims to optimize costs by reducing data movement and duplication, leveraging open formats, and providing a unified platform. It can potentially offer a better balance of cost and benefit by:

- Consolidating Tools: Reducing the need for separate tools for BI and advanced analytics.

- Streamlining Operations: Simplifying data pipelines and management.

- Optimizing Storage: Storing raw data cost-effectively while providing structured views.

Ultimately, the ROI for any data infrastructure solution is tied to its ability to generate actionable insights that drive business value. US businesses must carefully weigh the costs against the specific analytical capabilities and strategic advantages each option provides in their unique operational context for 2025.
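The arithmetic behind such a comparison is simple enough to sketch. The helper functions below are hypothetical, and every number in the example call is a made-up placeholder rather than a real price or benchmark; the point is only the structure of the calculation.

```python
def monthly_cost(storage_tb, price_per_tb, compute_hours, price_per_hour, people_cost):
    """Total monthly cost: storage + compute + the people who run the platform."""
    return storage_tb * price_per_tb + compute_hours * price_per_hour + people_cost


def roi(annual_benefit, annual_cost):
    """Return on investment as a fraction: (benefit - cost) / cost."""
    if annual_cost <= 0:
        raise ValueError("annual_cost must be positive")
    return (annual_benefit - annual_cost) / annual_cost


# Placeholder inputs only; replace every number with your own quotes and estimates.
example_monthly = monthly_cost(
    storage_tb=50, price_per_tb=20.0,
    compute_hours=400, price_per_hour=3.0,
    people_cost=30_000.0,
)
example_roi = roi(annual_benefit=500_000.0, annual_cost=example_monthly * 12)
```

Even with placeholder figures, the structure makes one point from this section visible: storage is often the smallest term, while compute and skilled staff tend to dominate the total.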

Future-Proofing Your Data Strategy: 2025 and Beyond

As US businesses look beyond 2025, the imperative to future-proof their data strategy becomes increasingly critical. The rapid pace of technological innovation, coupled with evolving business demands and regulatory landscapes, necessitates an adaptable and resilient approach to data management. This involves not just selecting the right tools today but building a framework that can evolve with tomorrow’s challenges.

Embracing Open Standards and Interoperability

A key aspect of future-proofing is to avoid vendor lock-in and embrace open standards. This applies to data formats, APIs, and processing frameworks. Using open data formats like Parquet or ORC in data lakes, and ensuring interoperability between different cloud services and on-premise systems, provides the flexibility to switch technologies or integrate new ones as business needs change. This reduces dependence on single vendors and allows businesses to leverage the best-of-breed solutions available in the market.

Furthermore, investing in data virtualization layers can create an abstraction between data consumers and the underlying storage, allowing for greater agility in modifying the data landscape without impacting downstream applications.
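A virtualization layer boils down to consumers depending on an interface rather than on a storage system. The sketch below shows the idea with a Python `Protocol`; the `OrderSource` interface, the two adapters, and the `orders` table are hypothetical stand-ins, with SQLite playing the warehouse and a list of JSON strings playing the lake.

```python
import json
import sqlite3
from typing import Iterable, Protocol


class OrderSource(Protocol):
    """What consumers depend on: an interface, not a storage system."""

    def orders(self) -> Iterable[dict]: ...


class WarehouseOrders:
    """Adapter over a relational table."""

    def __init__(self, conn: sqlite3.Connection):
        self.conn = conn

    def orders(self):
        for order_id, amount in self.conn.execute("SELECT order_id, amount FROM orders"):
            yield {"order_id": order_id, "amount": amount}


class LakeOrders:
    """Adapter over raw JSON lines, applying types at read time."""

    def __init__(self, raw_lines):
        self.raw_lines = raw_lines

    def orders(self):
        for line in self.raw_lines:
            record = json.loads(line)
            yield {"order_id": int(record["order_id"]), "amount": float(record["amount"])}


def total_revenue(source: OrderSource) -> float:
    """Downstream code: unchanged whichever backend sits behind the interface."""
    return sum(order["amount"] for order in source.orders())
```

Swapping `WarehouseOrders` for `LakeOrders` requires no change to `total_revenue`, which is the agility the paragraph above describes. Commercial virtualization and query-federation tools provide this at the SQL level rather than in application code.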

Prioritizing Data Literacy and Continuous Learning

Technology alone cannot future-proof a data strategy. The human element is equally, if not more, important. Businesses must invest in fostering data literacy across all levels of the organization, from executives to front-line employees. This means providing training, promoting a culture of data-driven decision-making, and empowering employees to ask questions and seek insights from data.

For data professionals, continuous learning is non-negotiable. The landscape of tools, techniques, and best practices in data engineering, data science, and machine learning is constantly evolving. Organizations that support their teams in acquiring new skills will be better equipped to adapt to future data challenges and innovations.

Scalability and Elasticity by Design

Designing data architectures with scalability and elasticity in mind from the outset is crucial. Cloud-native solutions are built around these capabilities, allowing businesses to scale compute and storage resources up or down based on demand. This helps avoid over-provisioning and lets the data infrastructure absorb unexpected spikes in data volume or analytical workloads without severe performance degradation or runaway costs.

The ability to adapt quickly to changing data volumes and processing needs will be a defining characteristic of successful data strategies in the years to come. This includes considering serverless architectures for event-driven data processing and leveraging containerization for consistent deployment of analytical applications.

By focusing on open standards, human capital development, and scalable architecture, US businesses can build a data strategy that is not only robust for 2025 but also resilient and adaptable for the challenges and opportunities of the future.

| Key Aspect | Description |
|---|---|
| Data Structure | Data warehouses hold highly structured data; data lakes store raw data of any type (structured, semi-structured, unstructured). |
| Primary Use | Warehouses for BI & reporting; lakes for advanced analytics & AI. |
| Flexibility | Lakes offer high flexibility; warehouses prioritize data consistency. |
| Emerging Trend | The data lakehouse combines the strengths of both for unified data management. |

Frequently Asked Questions about Data Lakes vs. Data Warehouses

What is the main difference between a data lake and a data warehouse?

A data warehouse stores structured, processed data for specific analytical purposes, typically business intelligence. A data lake, conversely, stores raw, unprocessed data of all types (structured, semi-structured, unstructured) for future use, often for advanced analytics and machine learning, allowing for greater flexibility.

Which is the better fit for a small US business?

For small US businesses, a data warehouse or a smaller, cloud-based data lakehouse often provides more immediate value for traditional BI needs. Data lakes require more advanced technical expertise and may be overkill unless the business has diverse data types and specific advanced analytics goals from the outset.

Can a data lake and a data warehouse work together?

Yes, they often do. Many organizations use a data lake as a landing zone for all raw data, with a data warehouse extracting and transforming relevant, structured data from the lake for specific business intelligence applications. This hybrid approach leverages the strengths of both systems.

What is a data lakehouse, and why is it gaining popularity?

A data lakehouse is a newer architecture that combines the flexibility and cost-efficiency of a data lake with the data management and ACID transaction capabilities of a data warehouse. It’s gaining popularity because it simplifies data architecture, reduces data duplication, and enables both traditional BI and advanced analytics on a single platform.

What skills are needed to manage a data lake versus a data warehouse?

Managing a data warehouse typically requires SQL expertise, ETL development, and BI tool proficiency. A data lake often demands proficiency in distributed computing (e.g., Spark), programming languages (Python/R), and data science/machine learning concepts, alongside strong data engineering skills for managing raw, diverse data.

Conclusion

The choice between a data lake and a data warehouse is a strategic decision for US businesses in 2025, profoundly impacting their data management capabilities and analytical prowess. While data warehouses remain indispensable for structured, reliable business intelligence, data lakes offer unparalleled flexibility for advanced analytics and diverse data types. The emerging data lakehouse architecture provides a compelling middle ground, combining the best features of both. Ultimately, the optimal data strategy will depend on specific business needs, analytical maturity, and a clear vision for how data will drive future growth and innovation. By carefully evaluating their requirements and embracing evolving technologies, businesses can build a robust data infrastructure that delivers sustained competitive advantage.